GLM in R: Generalized Linear Model (with Example)

What is logistic regression?

Logistic regression is used for classification when the target is binary (e.g., 0/1). Instead of predicting a continuous value like linear regression, it models the probability that an observation belongs to the positive class.

Imagine you want to predict whether a loan is rejected or approved based on an applicant’s attributes. We can encode the outcome as \(y \in \{0, 1\}\), where \(y=0\) means “rejected” and \(y=1\) means “approved”.

A logistic regression differs from linear regression in two key ways:

- The dependent variable is binary.

- The model output is transformed through a link function (the sigmoid), which maps any real number to a value between 0 and 1: \[ \sigma(t)=\frac{1}{1+\exp(-t)} \]

The sigmoid returns a probability. To obtain a class label, a common approach is to set a decision threshold (e.g., 0.5): values above the threshold are classified as 1, and values below as 0.

How to fit a GLM (logistic) in R

We will use the Adult dataset to illustrate logistic regression. The goal is to predict whether an individual’s annual income exceeds 50,000 USD.

Import data

library(dplyr)

library(readr)

data_adult <- read_csv(

"raw_data/adult.csv",

name_repair = "universal",

show_col_types = FALSE

) |>

# Make the import robust to possible "X"/"x" index columns

select(-any_of(c("X", "x"))) |>

mutate(across(where(is.character), as.factor))

glimpse(data_adult)## Rows: 48,842

## Columns: 9

## $ age <dbl> 25, 38, 28, 44, 18, 34, 29, 63, 24, 55, 65, 36, 26, 58…

## $ workclass <fct> Private, Private, Local-gov, Private, ?, Private, ?, S…

## $ education <fct> 11th, HS-grad, Assoc-acdm, Some-college, Some-college,…

## $ educational.num <dbl> 7, 9, 12, 10, 10, 6, 9, 15, 10, 4, 9, 13, 9, 9, 9, 14,…

## $ marital.status <fct> Never-married, Married-civ-spouse, Married-civ-spouse,…

## $ race <fct> Black, White, White, Black, White, White, Black, White…

## $ gender <fct> Male, Male, Male, Male, Female, Male, Male, Male, Fema…

## $ hours.per.week <dbl> 40, 50, 40, 40, 30, 30, 40, 32, 40, 10, 40, 40, 39, 35…

## $ income <fct> <=50K, <=50K, >50K, >50K, <=50K, <=50K, <=50K, >50K, <…We will proceed as follows:

- Step 1: Inspect numeric variables

- Step 2: Inspect categorical variables

- Step 3: Feature engineering

- Step 4: Exploratory summaries

- Step 5: Train/test split

- Step 6: Fit the model

- Step 7: Evaluate performance

- Step 8: Improve the model

Your task is to predict which individuals will have an income above 50K.

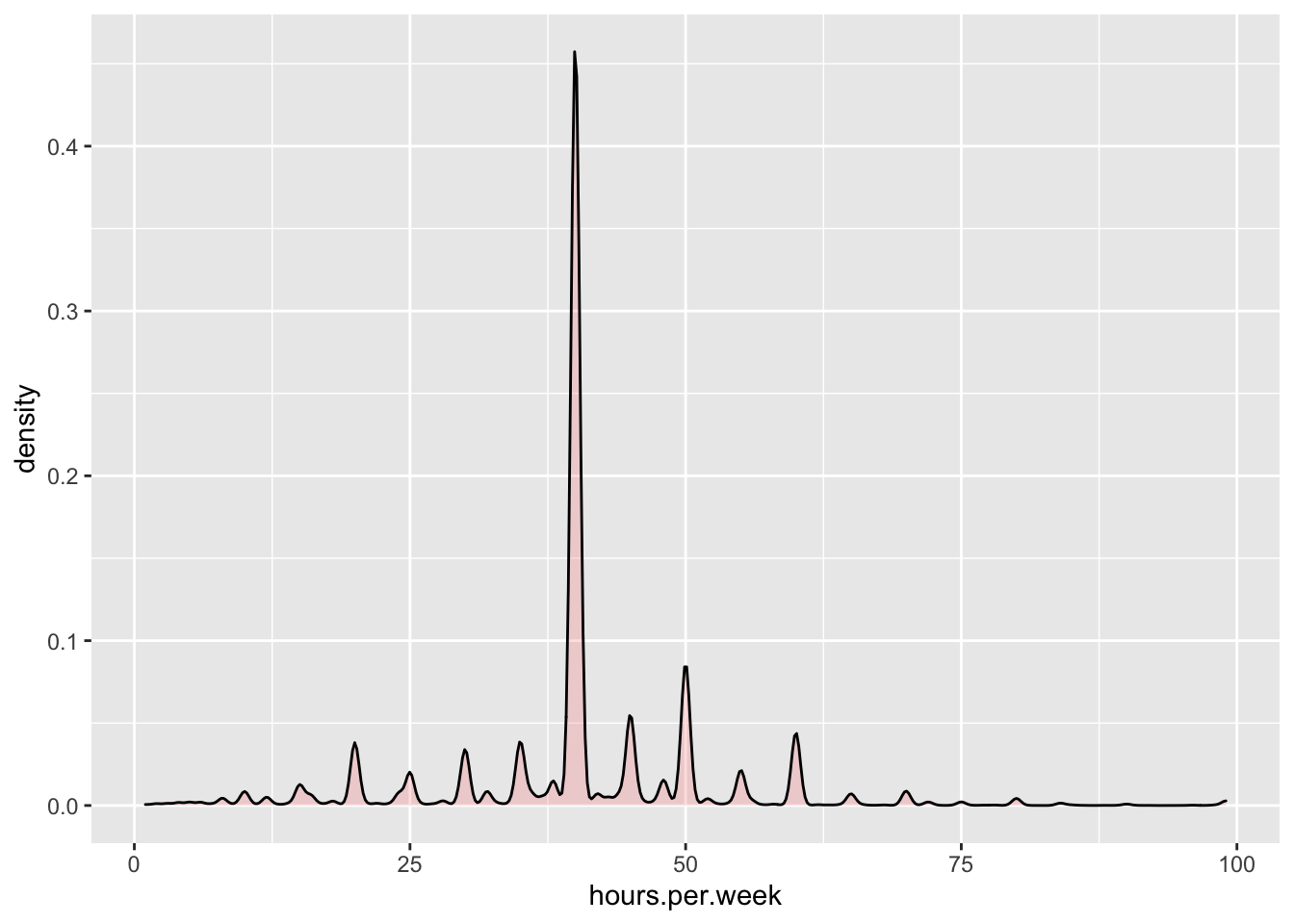

Step 1) Inspect numeric variables

## age educational.num hours.per.week

## Min. :17.00 Min. : 1.00 Min. : 1.00

## 1st Qu.:28.00 1st Qu.: 9.00 1st Qu.:40.00

## Median :37.00 Median :10.00 Median :40.00

## Mean :38.64 Mean :10.08 Mean :40.42

## 3rd Qu.:48.00 3rd Qu.:12.00 3rd Qu.:45.00

## Max. :90.00 Max. :16.00 Max. :99.00From the summary, some variables are on very different scales, and

hours.per.week can contain high values (potential

outliers).

1) Plot the distribution of hours.per.week

library(ggplot2)

ggplot(data_adult, aes(x = hours.per.week)) +

geom_histogram(bins = 40, fill = "#4AA4DE", color = "white", alpha = 0.85) +

labs(x = "Hours per week", y = "Count") +

theme_classic()

2) Cap extreme values (optional) and standardize

A simple way to reduce the influence of extreme values is to remove

the top 1% of hours.per.week (99th percentile).

## 99%

## 80## [1] 48314 9Now standardize numeric variables (z-scores).

Note: this lesson standardizes before splitting for simplicity; in

production, scaling parameters should be learned on the training set and

applied to the test set.

data_adult_rescale <- data_adult_drop |>

mutate(across(where(is.numeric), ~ as.numeric(scale(.x))))

glimpse(data_adult_rescale)## Rows: 48,314

## Columns: 9

## $ age <dbl> -0.99161306, -0.04464061, -0.77308096, 0.39242361, -1.…

## $ workclass <fct> Private, Private, Local-gov, Private, ?, Private, ?, S…

## $ education <fct> 11th, HS-grad, Assoc-acdm, Some-college, Some-college,…

## $ educational.num <dbl> -1.19830033, -0.41917209, 0.74952027, -0.02960797, -0.…

## $ marital.status <fct> Never-married, Married-civ-spouse, Married-civ-spouse,…

## $ race <fct> Black, White, White, Black, White, White, Black, White…

## $ gender <fct> Male, Male, Male, Male, Female, Male, Male, Male, Fema…

## $ hours.per.week <dbl> 0.008216002, 0.885642886, 0.008216002, 0.008216002, -0…

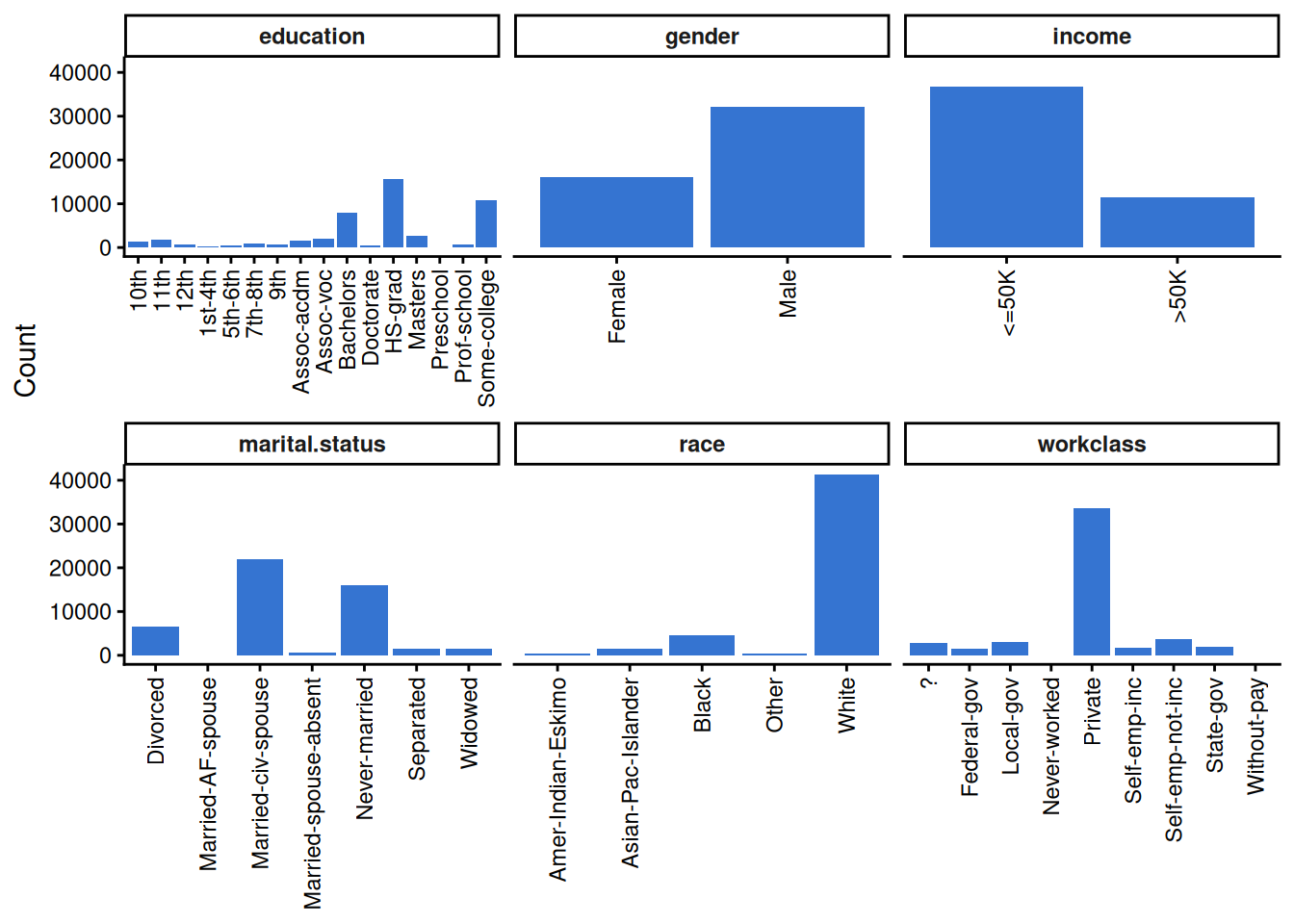

## $ income <fct> <=50K, <=50K, >50K, >50K, <=50K, <=50K, <=50K, >50K, <…Step 2) Inspect categorical variables

This step has two objectives:

- Check the number of levels in each categorical column.

- Identify candidates for level recoding.

## [1] 6Instead of generating one plot per variable with

lapply(), a tidy approach is to reshape to long format and

facet the bar charts.

library(tidyr)

factor_long <- factor_df |>

pivot_longer(cols = everything(), names_to = "variable", values_to = "level")

ggplot(factor_long, aes(x = level)) +

geom_bar(fill = "#1F65CC", alpha = 0.9) +

facet_wrap(~ variable, scales = "free_x") +

theme_classic() +

theme(

axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1),

strip.text = element_text(face = "bold")

) +

labs(x = NULL, y = "Count")

Step 3) Feature engineering

Recode education

The education variable often has many levels. A common

approach is to group them into fewer, more interpretable categories.

recast_data <- data_adult_rescale |>

mutate(

education = case_when(

education %in% c("Preschool", "1st-4th", "5th-6th", "7th-8th", "9th",

"10th", "11th", "12th") ~ "Dropout",

education == "HS-grad" ~ "HighGrad",

education %in% c("Some-college", "Assoc-acdm", "Assoc-voc") ~ "Community",

education == "Bachelors" ~ "Bachelors",

education %in% c("Masters", "Prof-school") ~ "Master",

education == "Doctorate" ~ "PhD",

TRUE ~ as.character(education)

) |>

factor(levels = c("Dropout", "HighGrad", "Community", "Bachelors", "Master", "PhD"))

)Check how educational.num relates to the recoded

groups:

recast_data |>

dplyr::group_by(education) |>

dplyr::summarize(

average_educ_year = mean(educational.num, na.rm = TRUE),

count = n(),

.groups = "drop"

) |>

dplyr::arrange(average_educ_year)| education | average_educ_year | count |

|---|---|---|

| Dropout | -1.7365624 | 6340 |

| HighGrad | -0.4191721 | 15608 |

| Community | 0.1111341 | 14396 |

| Bachelors | 1.1390844 | 7968 |

| Master | 1.6192475 | 3427 |

| PhD | 2.3077767 | 575 |

Recode marital.status

recast_data <- recast_data |>

mutate(

marital.status = case_when(

marital.status %in% c("Never-married", "Married-spouse-absent") ~ "Not_married",

marital.status %in% c("Married-AF-spouse", "Married-civ-spouse") ~ "Married",

marital.status %in% c("Separated", "Divorced") ~ "Separated",

marital.status == "Widowed" ~ "Widowed",

TRUE ~ as.character(marital.status)

) |>

factor()

)

table(recast_data$marital.status)##

## Married Not_married Separated Widowed

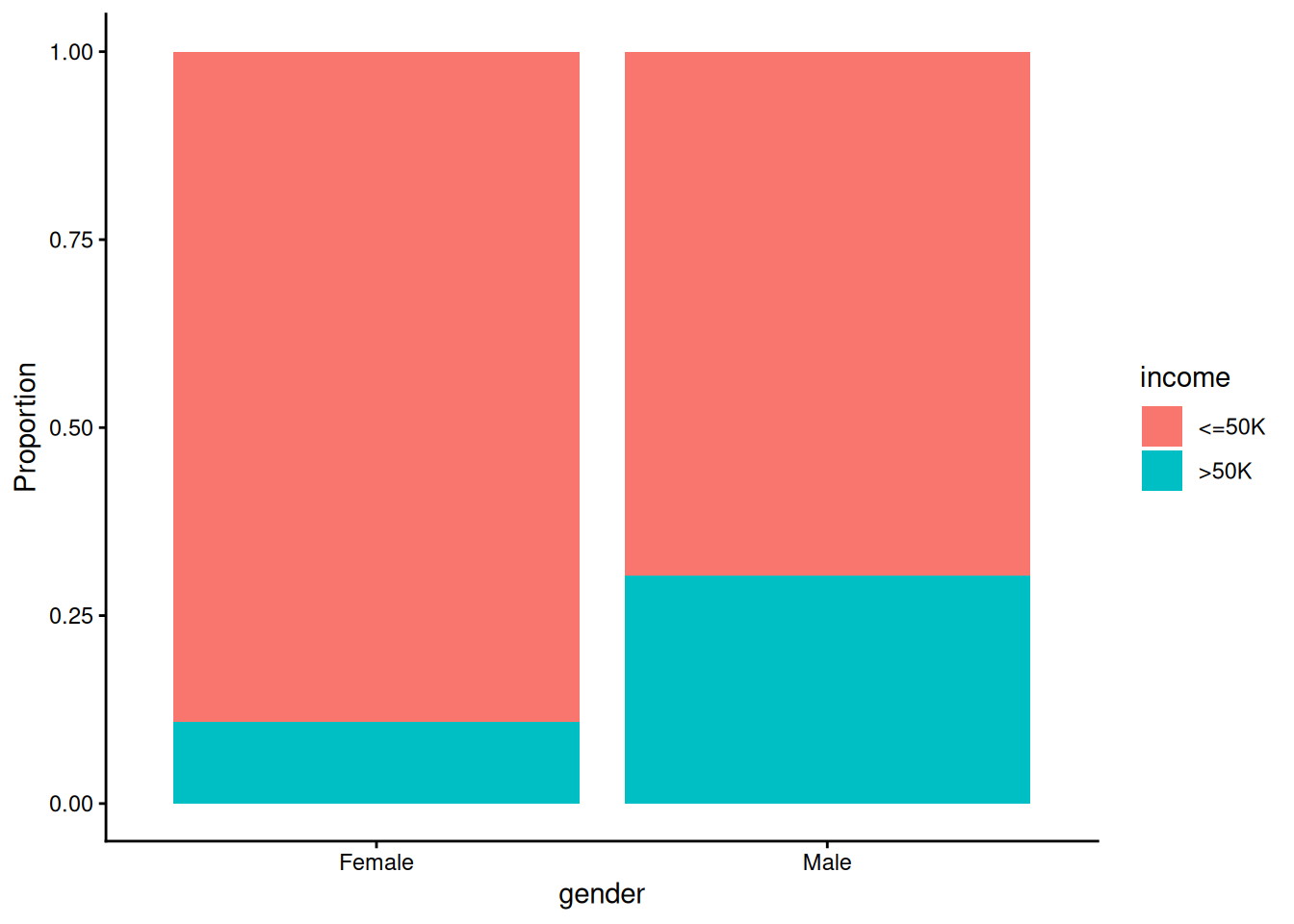

## 22085 16638 8084 1507Step 4) Exploratory summaries

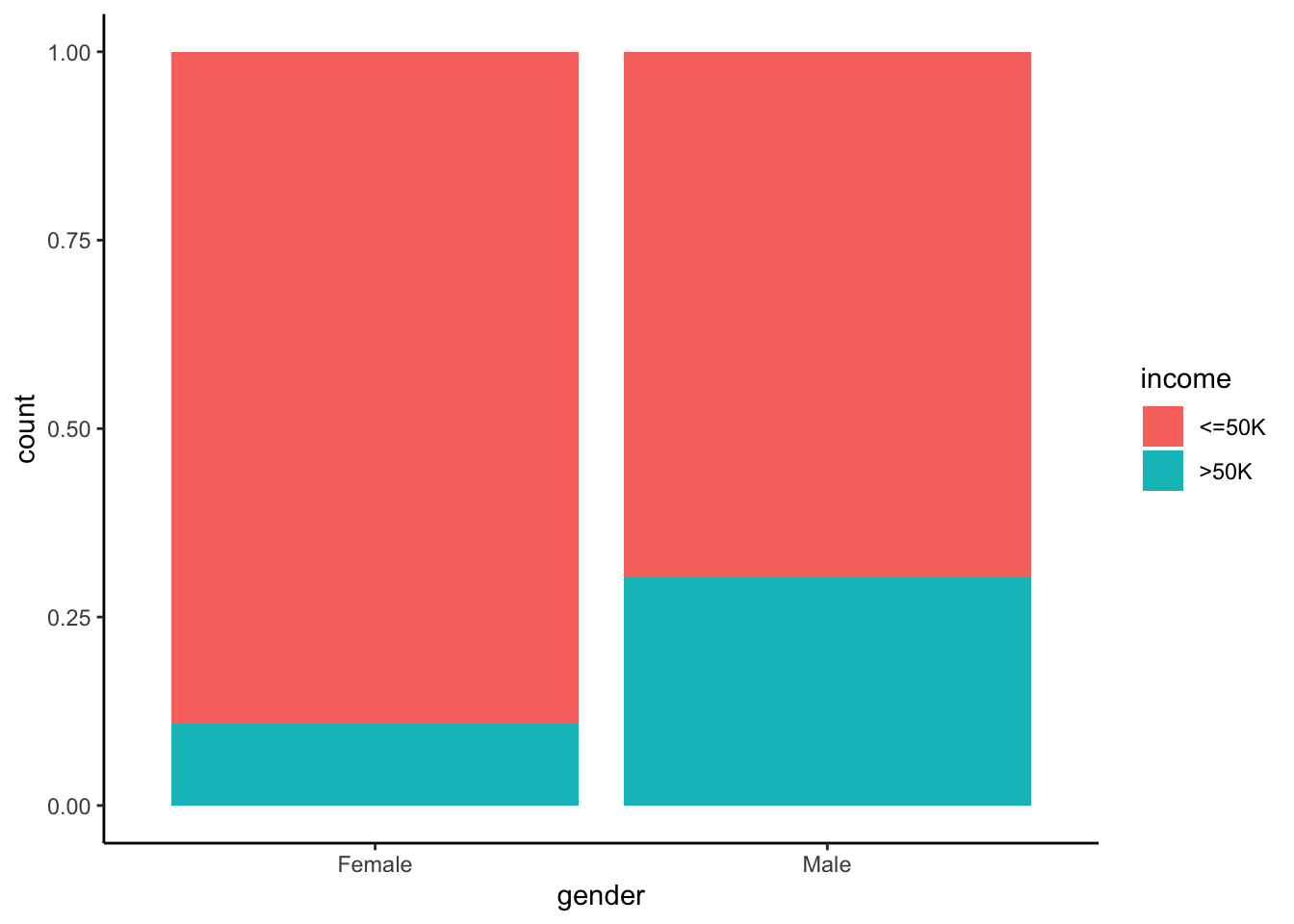

Income distribution by gender

ggplot(recast_data, aes(x = gender, fill = income)) +

geom_bar(position = "fill") +

theme_classic() +

labs(y = "Proportion")

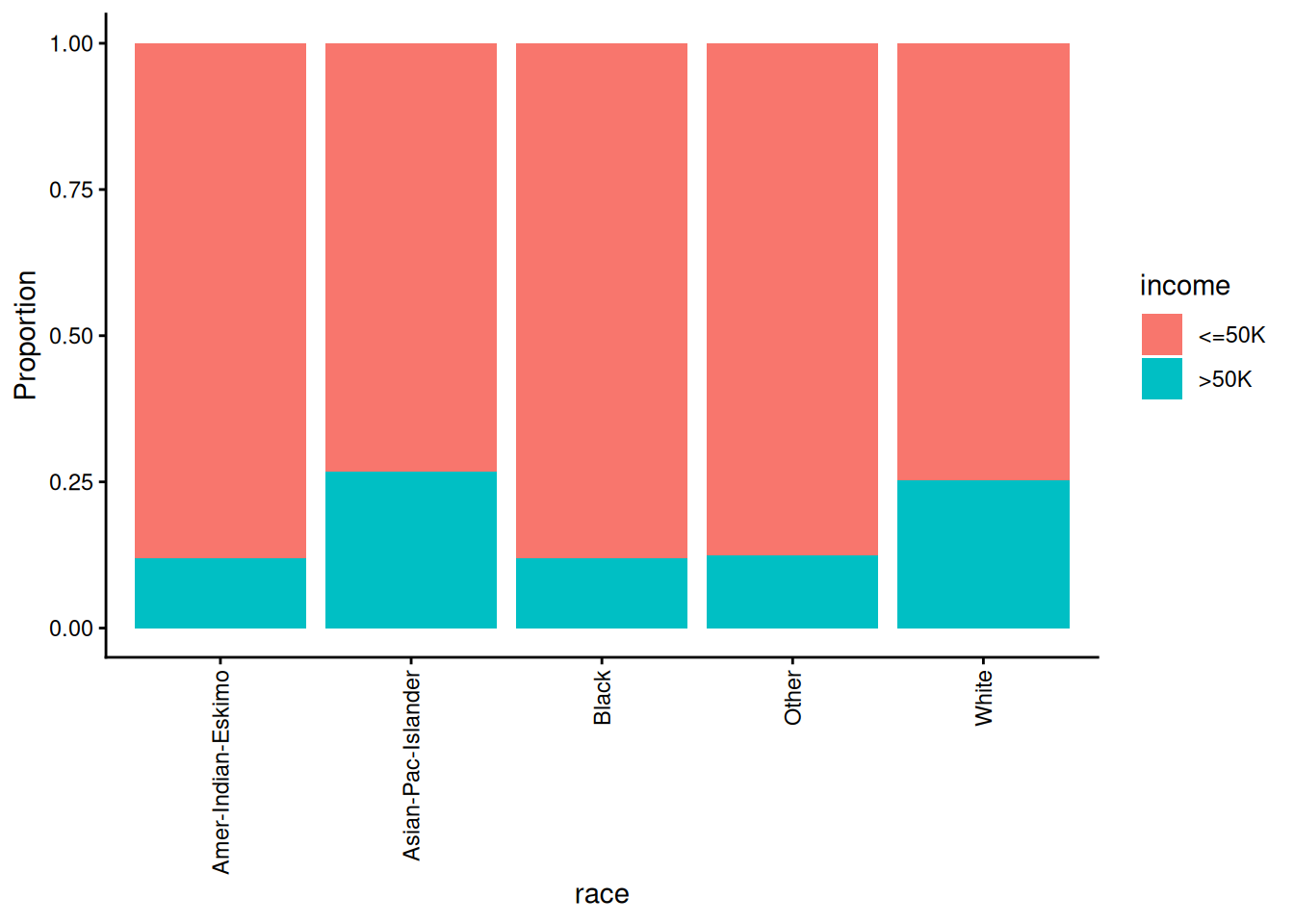

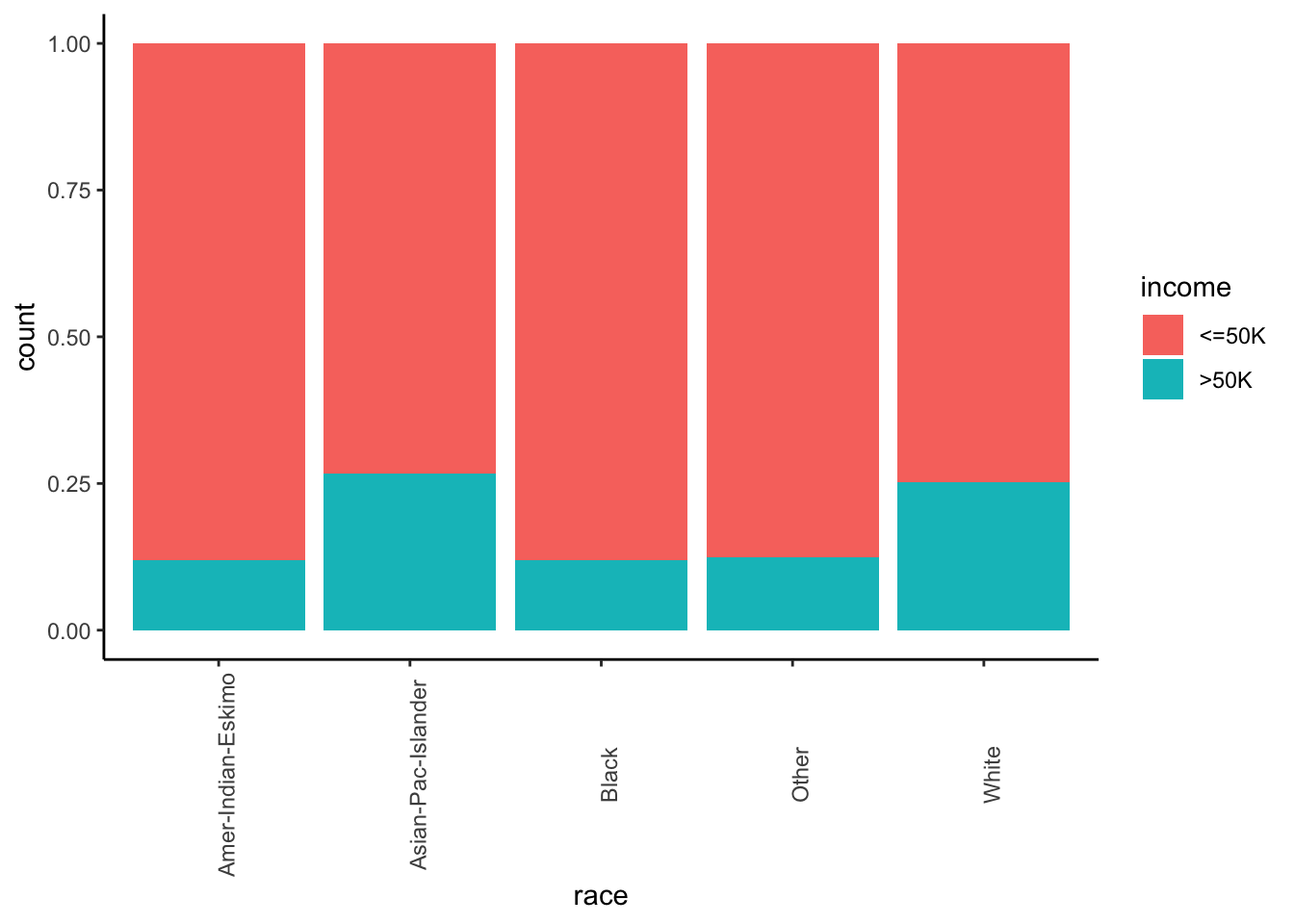

Income distribution by race

ggplot(recast_data, aes(x = race, fill = income)) +

geom_bar(position = "fill") +

theme_classic() +

theme(axis.text.x = element_text(angle = 90, vjust = 0.5, hjust = 1)) +

labs(y = "Proportion")

Hours per week by gender

ggplot(recast_data, aes(x = gender, y = hours.per.week)) +

geom_boxplot() +

stat_summary(fun = mean, geom = "point", size = 3, color = "steelblue") +

theme_classic()

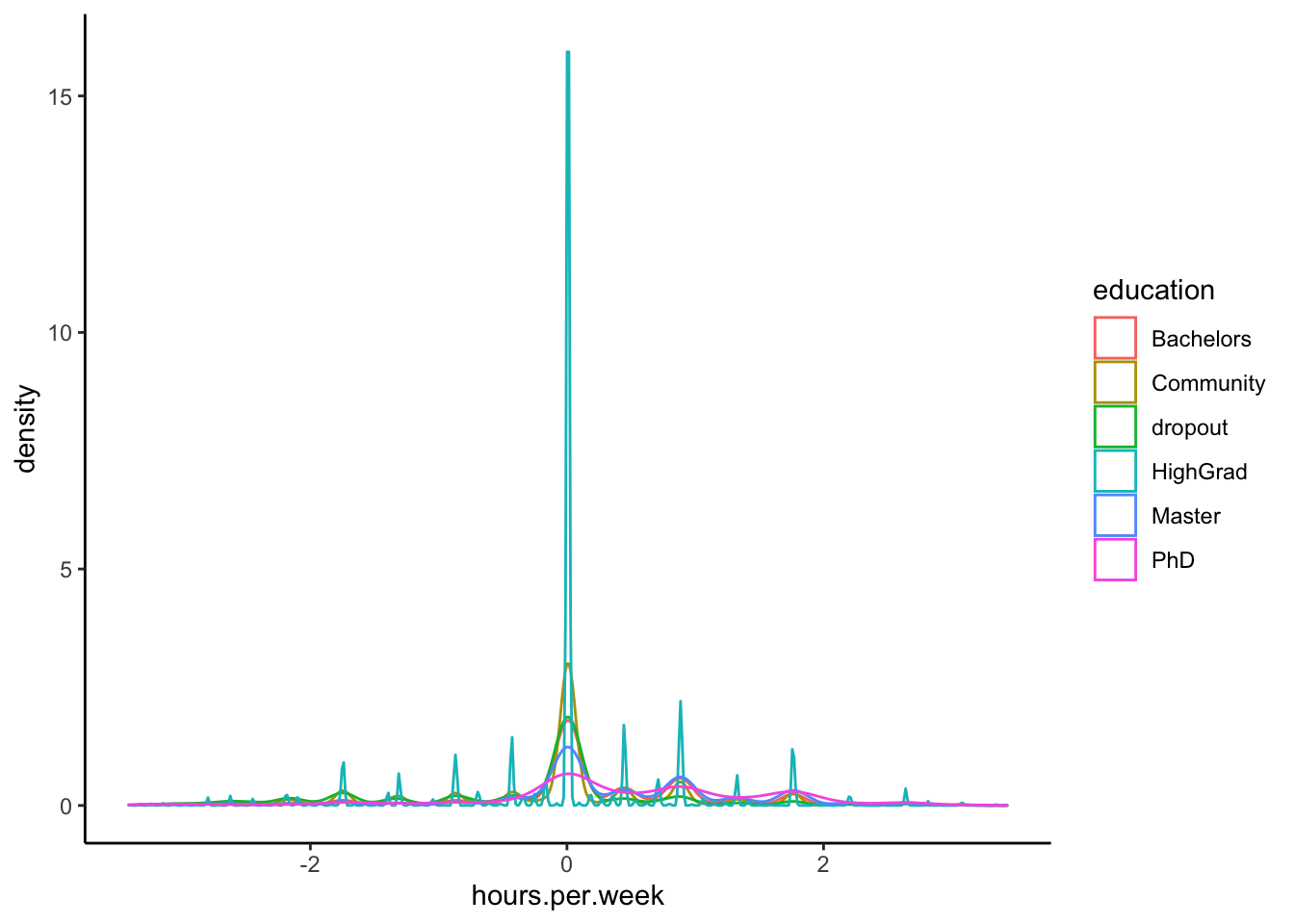

Hours per week density by education

ggplot(recast_data, aes(x = hours.per.week, color = education)) +

geom_density(alpha = 0.25) +

theme_classic()

A quick one-way ANOVA can test differences in average working hours across education groups:

## Df Sum Sq Mean Sq F value Pr(>F)

## education 5 1637 327.5 338.9 <2e-16 ***

## Residuals 48308 46676 1.0

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Non-linearity check: age vs hours.per.week

ggplot(recast_data, aes(x = age, y = hours.per.week, color = income)) +

geom_point(size = 0.5, alpha = 0.6) +

geom_smooth(method = "lm", formula = y ~ poly(x, 2), se = TRUE) +

theme_classic()

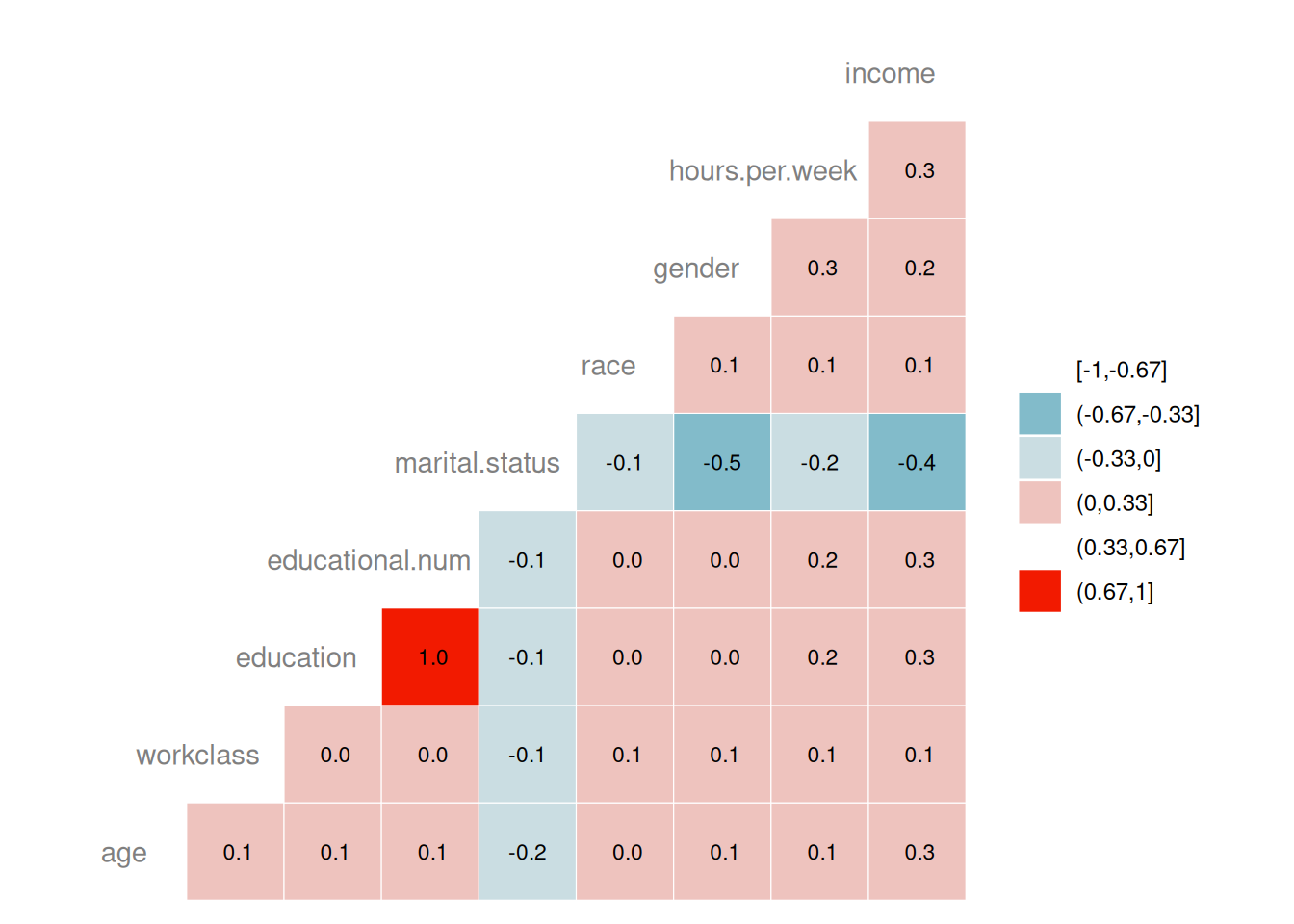

Correlation heatmap (quick & approximate)

This converts factors to integer codes to compute a Spearman correlation matrix. Treat this as exploratory only.

library(GGally)

corr_df <- recast_data |>

mutate(across(where(is.factor), ~ as.integer(.x)))

ggcorr(

corr_df,

method = c("pairwise", "spearman"),

nbreaks = 6,

hjust = 0.8,

label = TRUE,

label_size = 3,

color = "grey50"

)

Step 5) Train/test split

A supervised learning task requires splitting into train and test sets. Here we do a random split (80/20).

set.seed(1234)

n <- nrow(recast_data)

idx_train <- sample.int(n, size = floor(0.8 * n))

data_train <- recast_data[idx_train, ]

data_test <- recast_data[-idx_train, ]

dim(data_train)## [1] 38651 9## [1] 9663 9Step 6) Fit the model (GLM logistic regression)

##

## Call:

## glm(formula = income ~ ., family = binomial(link = "logit"),

## data = data_train)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -2.273e+00 2.400e-01 -9.470 < 2e-16 ***

## age 4.259e-01 1.888e-02 22.558 < 2e-16 ***

## workclassFederal-gov 1.321e+00 1.182e-01 11.174 < 2e-16 ***

## workclassLocal-gov 6.912e-01 1.049e-01 6.587 4.48e-11 ***

## workclassNever-worked -5.485e+00 7.233e+01 -0.076 0.939554

## workclassPrivate 8.009e-01 9.182e-02 8.722 < 2e-16 ***

## workclassSelf-emp-inc 1.237e+00 1.135e-01 10.900 < 2e-16 ***

## workclassSelf-emp-not-inc 2.447e-01 1.021e-01 2.397 0.016508 *

## workclassState-gov 4.950e-01 1.160e-01 4.266 1.99e-05 ***

## workclassWithout-pay -1.192e+00 1.094e+00 -1.090 0.275666

## educationHighGrad 3.981e-01 1.133e-01 3.514 0.000442 ***

## educationCommunity 6.799e-01 1.456e-01 4.669 3.03e-06 ***

## educationBachelors 1.070e+00 2.099e-01 5.097 3.45e-07 ***

## educationMaster 1.435e+00 2.445e-01 5.871 4.32e-09 ***

## educationPhD 1.482e+00 3.127e-01 4.740 2.13e-06 ***

## educational.num 5.684e-01 6.947e-02 8.182 2.79e-16 ***

## marital.statusNot_married -2.565e+00 5.138e-02 -49.910 < 2e-16 ***

## marital.statusSeparated -2.148e+00 5.350e-02 -40.157 < 2e-16 ***

## marital.statusWidowed -2.278e+00 1.209e-01 -18.850 < 2e-16 ***

## raceAsian-Pac-Islander 2.912e-02 1.938e-01 0.150 0.880573

## raceBlack 2.779e-02 1.836e-01 0.151 0.879683

## raceOther -2.603e-05 2.675e-01 0.000 0.999922

## raceWhite 2.790e-01 1.744e-01 1.600 0.109697

## genderMale 6.100e-02 4.249e-02 1.436 0.151131

## hours.per.week 4.422e-01 1.749e-02 25.275 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 42409 on 38650 degrees of freedom

## Residual deviance: 27886 on 38626 degrees of freedom

## AIC: 27936

##

## Number of Fisher Scoring iterations: 10You can access metrics directly from the fitted model. For example:

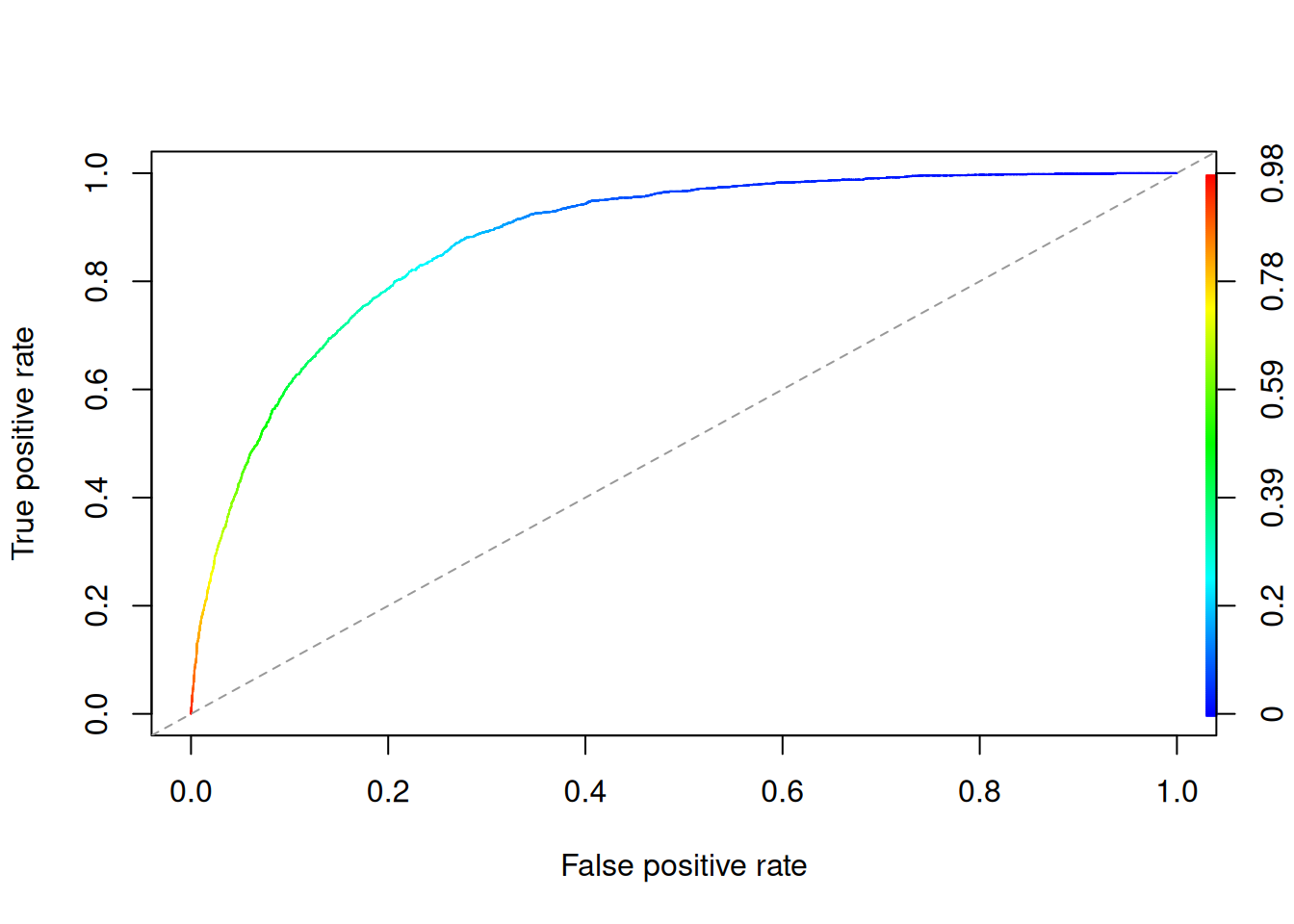

## [1] 27935.81Step 7) Evaluate performance

Confusion matrix (threshold = 0.5)

prob <- predict(logit, newdata = data_test, type = "response")

# Make predictions as factor with same levels as the truth

pred_class <- ifelse(prob > 0.5, ">50K", "<=50K") |>

factor(levels = levels(data_test$income))

cm <- table(truth = data_test$income, estimate = pred_class)

cm## estimate

## truth <=50K >50K

## <=50K 6825 528

## >50K 1107 1203Accuracy, precision, recall, F1

metrics_from_cm <- function(cm, positive = ">50K", negative = "<=50K") {

tp <- cm[positive, positive]

tn <- cm[negative, negative]

fp <- cm[negative, positive]

fn <- cm[positive, negative]

accuracy <- (tp + tn) / sum(cm)

precision <- tp / (tp + fp)

recall <- tp / (tp + fn)

f1 <- 2 * (precision * recall) / (precision + recall)

c(accuracy = accuracy, precision = precision, recall = recall, f1 = f1)

}

metrics_from_cm(cm)## accuracy precision recall f1

## 0.8307979 0.6949740 0.5207792 0.5953972Step 8) Improve the model (interaction terms + model comparison)

A simple improvement is to add interaction terms (e.g.,

age:hours.per.week and gender:hours.per.week)

and compare models with a likelihood ratio test.

logit_2 <- glm(

income ~ . + age:hours.per.week + gender:hours.per.week,

data = data_train,

family = binomial(link = "logit")

)

anova(logit, logit_2, test = "Chisq")| Resid. Df | Resid. Dev | Df | Deviance | Pr(>Chi) |

|---|---|---|---|---|

| 38626 | 27885.81 | NA | NA | NA |

| 38624 | 27871.27 | 2 | 14.53429 | 0.0006981 |

Now compare performance on the test set:

prob_2 <- predict(logit_2, newdata = data_test, type = "response")

pred_class_2 <- ifelse(prob_2 > 0.5, ">50K", "<=50K") |>

factor(levels = levels(data_test$income))

cm_2 <- table(truth = data_test$income, estimate = pred_class_2)

cm_2## estimate

## truth <=50K >50K

## <=50K 6821 532

## >50K 1098 1212## accuracy precision recall f1

## 0.8313153 0.6949541 0.5246753 0.5979280Summary

- Logistic regression models the probability of a binary outcome using a sigmoid link.

- In R, logistic regression is fitted using

glm(..., family = binomial(link = "logit")). - For imbalanced classes, accuracy alone can be misleading; precision, recall, F1 and the ROC curve provide a clearer picture.

A work by Gianluca Sottile

gianluca.sottile@unipa.it